Nvidia is one of the biggest names in the tech industry right now, thanks to its significant strides in the artificial intelligence space. The company shows no signs of losing its lead as generative AI tools like ChatGPT explode in popularity. Beyond its consumer GPU business, Nvidia is also seeing huge success in the cloud and data center space, where its DGX platform enables high-performance AI computing.

You may have heard of it, but what exactly is Nvidia DGX? Let’s learn more about the DGX platform, its benefits, and the kind of systems that are deployed as a part of a DGX enterprise solution.

What is the Nvidia DGX platform?

A complete enterprise solution for AI development

Nvidia DGX is a platform that combines AI software with a line of servers and workstations built around GPGPU (general-purpose computing on graphics processing units) to enable and accelerate deep learning applications. Nvidia has produced a range of DGX systems over the years, from the original DGX-1, which dates all the way back to 2016, to more modern and advanced systems like the DGX B200.

Enterprise customers can use the DGX platform either through the cloud, via Nvidia DGX Cloud, or by deploying DGX systems in their own on-premises data centers, built from the ground up with high-performance components from Nvidia and its partners.

Plenty of DGX systems are in use today in data centers around the world, and the scale of the purpose-built hardware ranges from a single unified system like the DGX B200 to a DGX SuperPOD with multiple DGX GB200 systems. Nvidia has a long and growing list of high-value customers, such as Shell and BMW, that have deployed DGX systems.

A comprehensive AI platform for enterprise

A complete software and purpose-built hardware solution

Nvidia’s DGX platform is powered by the company’s own software, Nvidia Base Command, which Nvidia describes as the operating system of its DGX data centers. According to Nvidia, it provides everything businesses need for AI development and training, building on Nvidia AI Enterprise and Nvidia’s support services to deliver enterprise-grade AI training. Think of it as a one-stop solution for monitoring AI training jobs and other workloads, whether they run in an on-premises data center or in DGX Cloud. Key features of Nvidia Base Command include:

- Facilitates single-GPU, multi-GPU, and multi-node AI training

- Includes APIs for MLOps integration

- Built-in data set management and data governance

Nvidia DGX systems overview

Nvidia’s latest DGX B200 unified AI platform

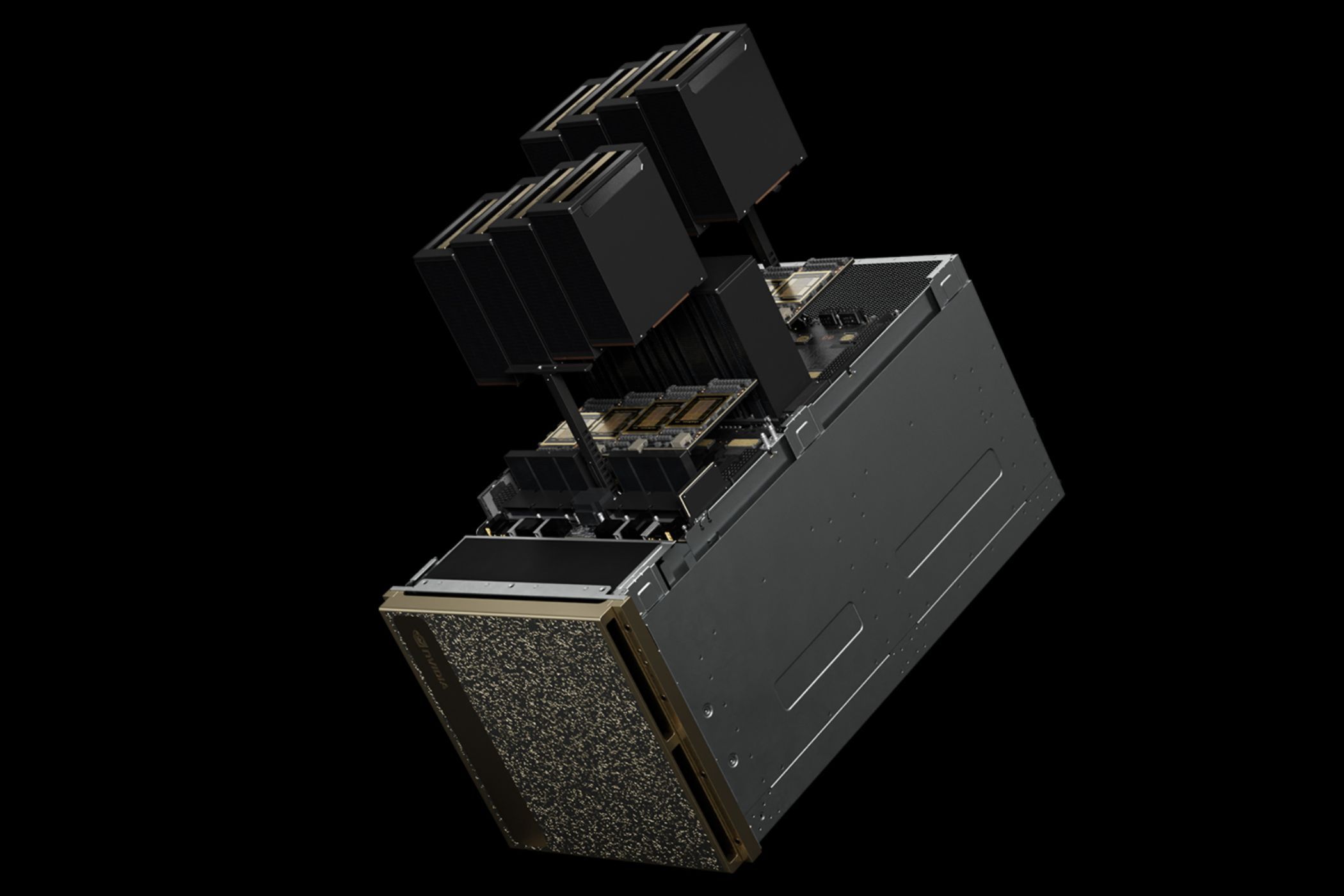

Nvidia’s DGX systems use a rackmount chassis design that incorporates a mainboard, high-performance x86 CPUs, and a cluster of GPUs. Nvidia’s latest DGX system is the DGX B200, which features eight Nvidia B200 Tensor Core GPUs that work together as “one giant GPU” with 1.4TB of GPU memory.

Key features of the Nvidia DGX B200 unified system:

- Built with eight Nvidia B200 Tensor Core GPUs

- 1.4TB of GPU memory space

- 72 petaFLOPS of training and 144 petaFLOPS of inference performance

- Dual 5th generation Intel Xeon Scalable processors

- Acts as the foundation of Nvidia’s latest DGX BasePOD and DGX SuperPOD

- Includes Nvidia AI Enterprise and Nvidia Base Command software
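The memory and performance figures above are totals for the whole system. As a rough illustration (not an Nvidia tool or spec sheet), the Python sketch below divides each total by the GPU count. It assumes decimal terabytes (1TB = 1,000GB) and an even split across the eight GPUs; because 1.4TB is itself a rounded headline number, the per-GPU memory it yields is approximate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemSpec:
    name: str
    gpu_count: int
    gpu_memory_tb: float     # total GPU memory, decimal terabytes (assumption)
    training_pflops: float   # aggregate training performance
    inference_pflops: float  # aggregate inference performance


def per_gpu(spec: SystemSpec) -> dict[str, float]:
    """Split each aggregate figure evenly across the GPUs (an assumption)."""
    return {
        "memory_gb": spec.gpu_memory_tb * 1000 / spec.gpu_count,
        "training_pflops": spec.training_pflops / spec.gpu_count,
        "inference_pflops": spec.inference_pflops / spec.gpu_count,
    }


# Headline DGX B200 figures as quoted in the list above.
dgx_b200 = SystemSpec("DGX B200", gpu_count=8, gpu_memory_tb=1.4,
                      training_pflops=72, inference_pflops=144)

for key, value in per_gpu(dgx_b200).items():
    print(f"{key}: {value:g}")
```

With these inputs, each GPU works out to roughly 175GB of memory, 9 petaFLOPS of training performance, and 18 petaFLOPS of inference performance.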

Multiple DGX systems can be combined into an AI factory: a purpose-built AI supercomputer that is always available for machine learning workloads. The Nvidia DGX SuperPOD with DGX GB200 systems, built for training and inferencing trillion-parameter generative AI models, uses liquid-cooled racks that each contain 36 Nvidia GB200 Grace Blackwell Superchips, for a total of 36 Nvidia Grace CPUs and 72 Blackwell GPUs per rack. The Superchips within a rack are linked with Nvidia NVLink, and multiple racks are connected with Nvidia Quantum InfiniBand, scaling to thousands of GB200 Superchips.
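To make the rack arithmetic concrete, here is a small Python sketch that tallies the processors in DGX GB200 racks. It assumes each GB200 Superchip pairs one Grace CPU with two Blackwell GPUs and that each rack holds 36 Superchips; the eight-rack call is an arbitrary example, not a quoted SuperPOD configuration.

```python
# Assumed composition of one GB200 Grace Blackwell Superchip.
GRACE_CPUS_PER_SUPERCHIP = 1
BLACKWELL_GPUS_PER_SUPERCHIP = 2
# Assumed number of Superchips in one liquid-cooled DGX GB200 rack.
SUPERCHIPS_PER_RACK = 36


def rack_totals(racks: int = 1) -> dict[str, int]:
    """Count Superchips, Grace CPUs, and Blackwell GPUs across `racks` racks."""
    superchips = SUPERCHIPS_PER_RACK * racks
    return {
        "superchips": superchips,
        "grace_cpus": superchips * GRACE_CPUS_PER_SUPERCHIP,
        "blackwell_gpus": superchips * BLACKWELL_GPUS_PER_SUPERCHIP,
    }


print(rack_totals())   # {'superchips': 36, 'grace_cpus': 36, 'blackwell_gpus': 72}
print(rack_totals(8))  # {'superchips': 288, 'grace_cpus': 288, 'blackwell_gpus': 576}
```

The per-rack totals come from the per-Superchip composition, which is why a single rack holds 36 CPUs but 72 GPUs rather than 36 of each per Superchip.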

FAQ

Q: What is HGX?

Nvidia HGX (short for Nvidia Hyperscale Graphics Extension) is a platform for OEMs building systems with Nvidia GPUs and interconnects. The latest is the HGX B200 server board, which links eight B200 GPUs through NVLink to support x86-based generative AI platforms. It uses Nvidia Quantum-2 InfiniBand and Spectrum-X Ethernet networking platforms to support networking speeds of up to 400Gb/s.

Q: What are DGX-1 and DGX-2?

These are earlier rack-mounted DGX server systems built around Intel Xeon CPUs. The DGX-1 was launched back in 2016 and paired dual Intel Xeon CPUs with eight GPUs based on Nvidia’s Pascal or Volta microarchitecture, 512GB of RAM, and four 1.92TB SSDs. The DGX-2, on the other hand, came out in 2018 and doubled the GPU count to 16 Volta-based graphics processing units.

Q: What is a DGX SuperPOD?

Nvidia’s DGX SuperPOD is a multimillion-dollar data center platform made up of racks containing a cluster of DGX systems. Nvidia’s new DGX SuperPOD is built from liquid-cooled DGX GB200 systems, each featuring 36 Nvidia GB200 Grace Blackwell Superchips connected with NVLink.