Meta this week introduced the next generation of its AI Training and Inference Accelerator chips. With demand for sophisticated AI models rising across industries, businesses need powerful and reliable computing infrastructure to keep pace, and Meta’s accelerator is built to handle complex AI workloads. With more than double the compute and memory bandwidth of its predecessor, the chip is engineered to optimize ranking and recommendation models, the systems that deliver personalized, high-quality content to users quickly.

Last year, Meta unveiled the Meta Training and Inference Accelerator (MTIA) v1, its first-generation AI inference accelerator, designed in-house with Meta’s AI workloads in mind, specifically the deep learning recommendation models that are improving a variety of experiences across the company’s range of products.

Key Takeaways

- Meta has shared details about the next generation of the Meta Training and Inference Accelerator (MTIA), its family of custom-made chips designed for Meta’s AI workloads.

- This latest version shows significant performance improvements over MTIA v1 and helps power Meta’s ranking and recommendation advertising models.

- MTIA is part of Meta’s growing investment in its AI infrastructure and will complement its existing and future AI infrastructure to deliver new and better experiences across its products and services.

The impact of Meta’s next-generation AI accelerator is not just theoretical. The chip is already deployed in data centers and is powering production models, which shows that it can handle the demands of today’s AI workloads.

Meta has not disclosed pricing details. Even so, the accelerator’s availability and readiness for deployment show the company’s commitment to pushing the boundaries of AI computing.

A Deep Dive into the Specifications

To truly appreciate the capabilities of Meta’s next-generation AI accelerator, it’s essential to explore its impressive specifications:

- Cutting-edge technology: Built on the TSMC 5nm process, ensuring optimal performance and efficiency.

- Impressive frequency: Operating at 1.35GHz, enabling lightning-fast computations.

- Robust instances: Featuring 2.35B gates and 103M flops (flip-flops, not floating-point operations), providing ample resources for complex AI models.

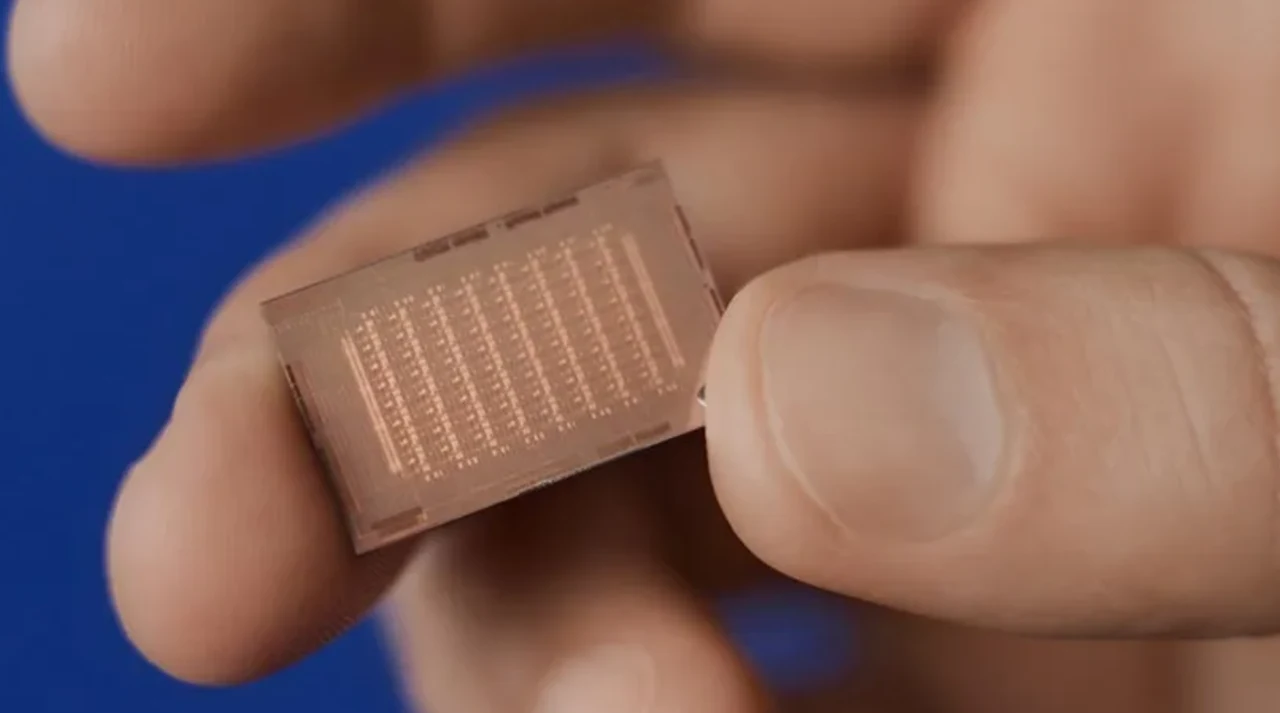

- Compact design: Measuring just 25.6 mm x 16.4 mm, with a total area of 421 mm², making it ideal for space-constrained environments.

- Efficient packaging: Housed in a 50mm x 40mm package, ensuring optimal thermal management and reliability.

- Low voltage operation: Running at 0.85V, minimizing power consumption without compromising performance.

- Thermal design power (TDP): Rated at 90W, striking the perfect balance between performance and energy efficiency.

- High-speed host connection: Equipped with 8x PCIe Gen5, providing a bandwidth of 32 GB/s for seamless data transfer.

- High GEMM TOPS: Delivering up to 708 TOPS (INT8) with sparsity, enabling lightning-fast matrix multiplications.

- Impressive SIMD TOPS: Offering up to 11.06 TOPS (INT8), facilitating efficient vector operations.

- Extensive memory capacity: Boasting 384 KB local memory per PE, 256 MB on-chip, and 128 GB off-chip LPDDR5, ensuring ample storage for even the most demanding AI models.

- Exceptional memory bandwidth: Providing 1 TB/s local memory per PE, 2.7 TB/s on-chip, and 204.8 GB/s off-chip LPDDR5, guaranteeing rapid data access and processing.
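A few of the figures in the list can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope illustration, not Meta’s methodology. The function names are hypothetical, and the PCIe Gen5 signalling rate (32 GT/s per lane) and 128b/130b encoding are assumptions taken from the PCIe standard rather than from this article. It shows that the quoted die dimensions multiply out to roughly 420 mm² (close to the stated 421 mm², presumably a rounding difference), that an 8-lane Gen5 link carries about 31.5 GB/s per direction after encoding, and how many INT8 operations the chip would need to perform per byte fetched to stay at its peak sparse rate.

```python
# Back-of-the-envelope checks on the published figures (illustrative only).

# Figures quoted in the specification list.
DIE_WIDTH_MM = 25.6
DIE_HEIGHT_MM = 16.4
PEAK_INT8_SPARSE_TOPS = 708.0   # peak GEMM rate, INT8 with sparsity
ON_CHIP_BW_GBPS = 2700.0        # 2.7 TB/s on-chip memory bandwidth
OFF_CHIP_BW_GBPS = 204.8        # off-chip LPDDR5 bandwidth

# Assumptions from the PCIe standard, not from the article.
PCIE_GEN5_GT_PER_S = 32.0       # transfers per second per lane, per direction
PCIE_ENCODING = 128 / 130       # 128b/130b line encoding


def die_area_mm2(width_mm: float, height_mm: float) -> float:
    """Area of a rectangular die."""
    return width_mm * height_mm


def pcie_bandwidth_gbps(lanes: int) -> float:
    """One-direction link bandwidth in GB/s, before protocol overhead."""
    return lanes * PCIE_GEN5_GT_PER_S * PCIE_ENCODING / 8


def ops_per_byte(peak_tops: float, bandwidth_gbps: float) -> float:
    """Operations needed per byte moved to keep the compute units at peak."""
    return (peak_tops * 1e12) / (bandwidth_gbps * 1e9)


if __name__ == "__main__":
    print(f"Die area:            {die_area_mm2(DIE_WIDTH_MM, DIE_HEIGHT_MM):.2f} mm^2")
    print(f"PCIe Gen5 x8:        {pcie_bandwidth_gbps(8):.1f} GB/s per direction")
    print(f"Ops/byte (on-chip):  {ops_per_byte(PEAK_INT8_SPARSE_TOPS, ON_CHIP_BW_GBPS):.0f}")
    print(f"Ops/byte (off-chip): {ops_per_byte(PEAK_INT8_SPARSE_TOPS, OFF_CHIP_BW_GBPS):.0f}")
```

The gap between the on-chip ratio (a few hundred operations per byte) and the off-chip ratio (a few thousand) suggests why a large on-chip memory matters: a workload that has to stream most of its data from LPDDR5 would need very high data reuse to approach the peak compute figure.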

Embracing the AI Revolution

Meta’s next-generation accelerator serves as your gateway to endless possibilities and is just the beginning of a thrilling journey that will reshape industries and redefine the way we interact with machines. From generative AI to advanced research, the applications of this accelerator are limitless. Meta’s unwavering commitment to pushing the boundaries of AI computing, through custom silicon, memory bandwidth, and networking capacity, ensures that you’ll always have access to the most advanced tools and resources.

